Build, simulate, and deploy production-grade autonomous robotics systems using Physical AI, ROS 2, and NVIDIA Isaac, validated end-to-end in simulation.

Delivered in partnership with NVIDIA · Powered by AuraSim

Robotics is shifting from rule-based systems to model-driven intelligence. Most teams struggle to validate perception, navigation, and manipulation safely before deployment. This program exists to close that gap using simulation-first, production-ready Physical AI workflows.

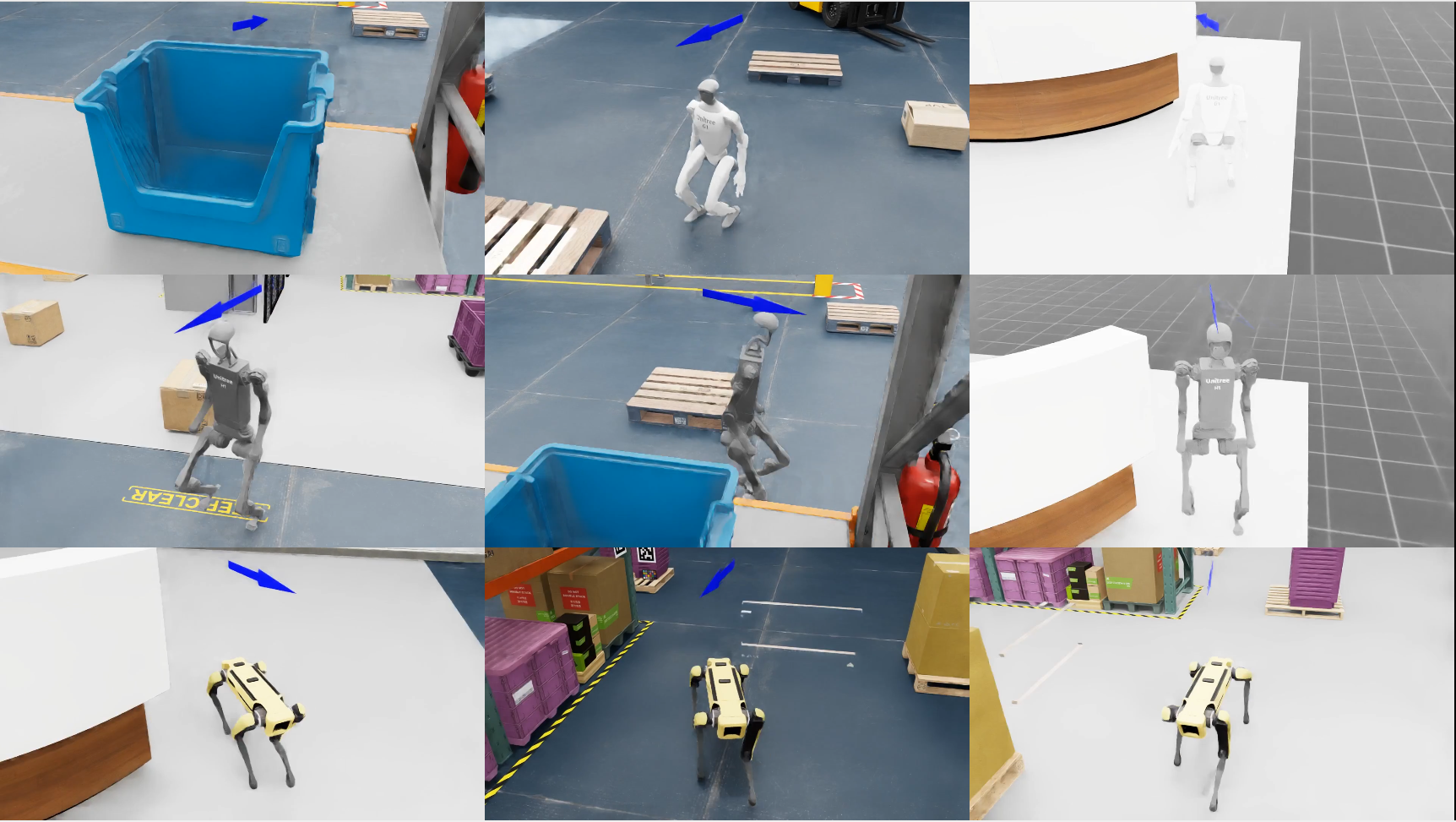

Captures spatial, physical, and contextual dynamics of complex environments.

Emulates real-world inputs (e.g., cameras, LiDAR, force sensors) and hardware behavior with precision.

Stress-test navigation, manipulation, and edge-case logic in synthetic but realistic scenarios.

Train models, run multi-agent scenarios, and iterate faster, entirely in the cloud.

Bridges the gap between synthetic training and real-world performance through adaptive simulation.

From idea to deployment, the AuraML and NVIDIA Physical AI developer program lets you simulate environments, train autonomy, and validate robotic systems virtually with unprecedented speed and precision.

AuraSim connects your robotics stack with a generative simulation engine that accelerates development across perception, planning, and control.

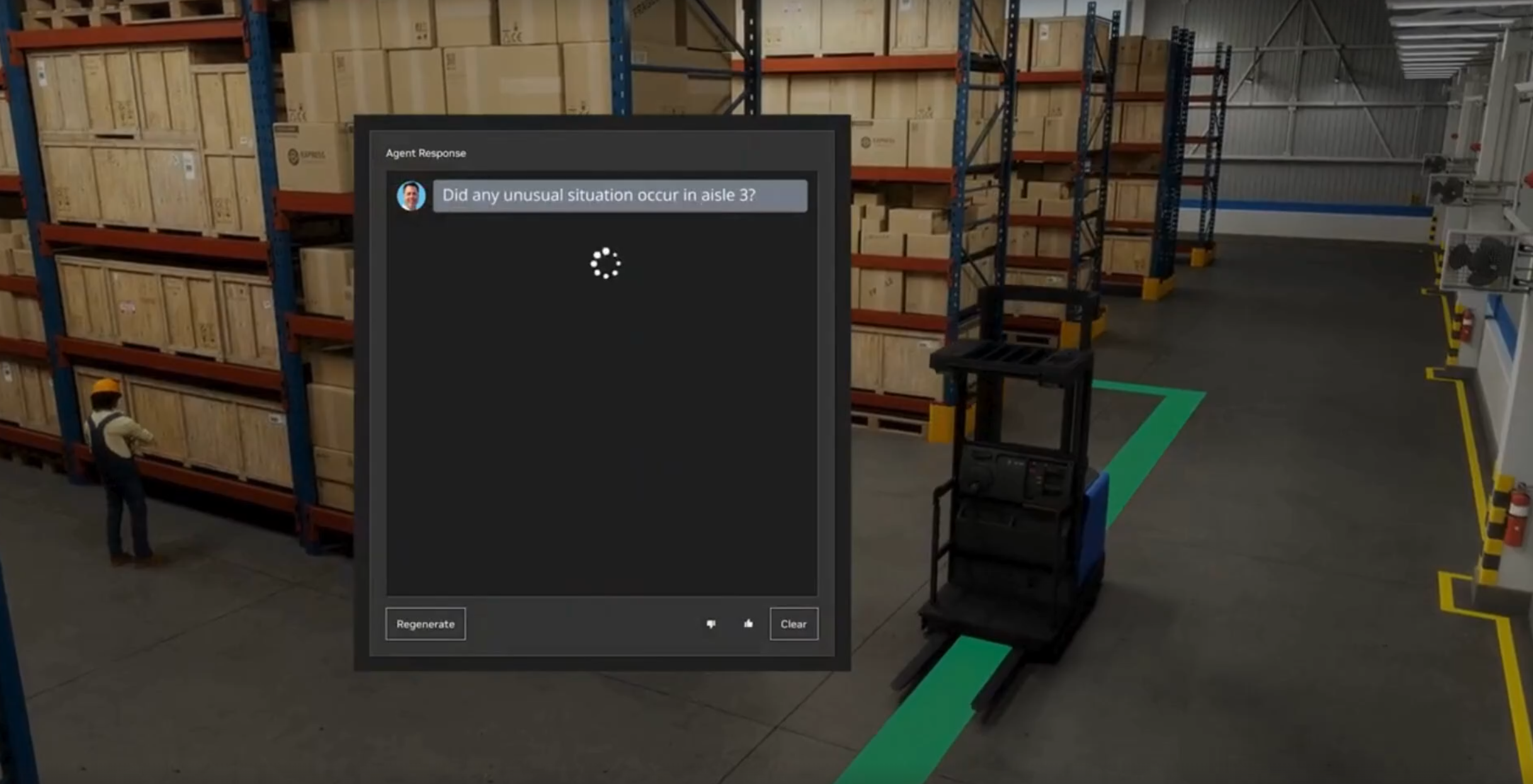

Digitally recreate your environment, from warehouses to assembly lines, using natural language or floorplans, ready for instant simulation.

Simulate diverse real-world scenarios and edge cases to train, test, and validate navigation, manipulation, and safety systems.

Iterate faster with sensor-accurate feedback and cloud-based validation workflows. Move from sim to site in a fraction of the time.

Seamlessly transfer learnings from simulation to real-world systems. Reduce on-site calibration and deployment risks with high-fidelity modeling.

Focus: System‑level understanding

Topics

1. What is Physical AI (vs classical robotics)

2. Modern robotics stack (simulation → data → policy → deployment)

3. Where AuraSim, Isaac Sim, Omniverse, and ROS 2 fit

4. Why simulation is the bottleneck in robotics

Hands-on

1. AuraSim environment walkthrough

2. Generating a first industrial scene (factory / warehouse)

Outcome: Clear mental model of end‑to‑end robotics systems

Focus: Generative robotics environments

Topics

1. USD fundamentals

2. Omniverse scene graph & physics

3. AuraSim generative assets & worlds

4. Domain randomization for robotics

Hands-on

1. Generate multiple factory layouts

2. Import scenes into Isaac Sim

Outcome: Simulation-ready robotics environments
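To make the domain randomization topic concrete, here is a minimal sketch in plain Python. It does not use the AuraSim or Isaac Sim APIs; the `SceneParams` fields, their ranges, and the function names are hypothetical and chosen only for illustration. The idea it shows is sampling many scene variants from fixed ranges, with a seed so that any scenario can be regenerated.

```python
import random
from dataclasses import dataclass


@dataclass
class SceneParams:
    """Hypothetical knobs for one randomized warehouse scene."""
    light_intensity: float  # arbitrary units
    floor_friction: float   # coefficient of friction
    num_pallets: int        # number of pallets placed in the scene
    camera_yaw_deg: float   # camera mounting error in degrees


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw one scene configuration from fixed, illustrative ranges."""
    return SceneParams(
        light_intensity=rng.uniform(300.0, 1500.0),
        floor_friction=rng.uniform(0.4, 1.0),
        num_pallets=rng.randint(5, 40),
        camera_yaw_deg=rng.uniform(-15.0, 15.0),
    )


def sample_batch(n: int, seed: int = 0) -> list[SceneParams]:
    """Sample n scenes; the seed makes the batch reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for params in sample_batch(3, seed=42):
        print(params)
```

In a real pipeline each sampled configuration would be handed to the simulator to build a scene; here the sketch stops at the sampling step.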

Focus: Making robots move correctly

Topics

1. Robot types: arms, cobots, AMRs

2. URDF/USD robots

3. Forward & inverse kinematics

4. Joint limits, constraints, controllers

Hands-on

1. Import industrial arm & mobile robot

2. Validate kinematics in Isaac Sim

Outcome: Robots with correct motion & control
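As a small illustration of the forward and inverse kinematics topics, the sketch below works through a two-link planar arm in plain Python. This is a textbook simplification, not the program's lab material: the link lengths, joint limits, and function names are assumptions made for the example, and an industrial arm would normally be handled through a URDF/USD model and a solver.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """End-effector (x, y) of a two-link planar arm, angles in radians."""
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Analytic IK returning the solution with a non-negative elbow angle.

    Raises ValueError when the target lies outside the reachable workspace.
    """
    cos_t2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_t2) > 1.0 + 1e-9:
        raise ValueError("target is outside the reachable workspace")
    cos_t2 = max(-1.0, min(1.0, cos_t2))  # guard against rounding error
    theta2 = math.acos(cos_t2)
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


def within_limits(angles, limits):
    """Check each joint angle against its (lower, upper) limit."""
    return all(lo <= a <= hi for a, (lo, hi) in zip(angles, limits))


if __name__ == "__main__":
    target = (1.2, 0.5)
    joints = inverse_kinematics(*target)
    print("joint angles:", joints)
    print("FK check:", forward_kinematics(*joints))
    # Hypothetical joint limits of +/-170 degrees on both joints.
    limit = math.radians(170.0)
    print("within limits:", within_limits(joints, [(-limit, limit)] * 2))
```

Running forward kinematics on the inverse-kinematics result is the same round-trip check one would use to validate a robot model in simulation.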

Focus: How robots see

Topics

1. Camera models (RGB, depth, stereo)

2. LiDAR & radar simulation

3. Proprioception & force/torque sensors

4. Synthetic data generation

Hands-on

1. Configure a multi-sensor robot

2. Generate labeled perception datasets using AuraSim

Outcome: Sensor‑accurate perception pipelines
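For the camera models topic, the following is a minimal sketch of the ideal pinhole model in plain Python: projecting a 3D point in the camera frame to a pixel, and recovering the point again from a pixel plus depth. The intrinsics used (`fx`, `fy`, `cx`, `cy`) are made-up example values, lens distortion is ignored, and none of this is tied to a specific simulator API.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point (x, y, z) in the camera frame to pixel (u, v).

    Returns None for points at or behind the image plane (z <= 0).
    """
    x, y, z = point_cam
    if z <= 0.0:
        return None
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


def backproject(u, v, depth, fx, fy, cx, cy):
    """Recover the 3D camera-frame point from a pixel and its depth."""
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    return x, y, depth


if __name__ == "__main__":
    # Example intrinsics for a hypothetical 640x480 camera.
    intrinsics = dict(fx=525.0, fy=525.0, cx=320.0, cy=240.0)
    pixel = project_point((0.2, -0.1, 2.0), **intrinsics)
    print("pixel:", pixel)
    print("recovered point:", backproject(*pixel, 2.0, **intrinsics))
```

A depth camera in simulation supplies the `depth` value per pixel, which is what makes the back-projection step possible.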

Focus: Industry middleware & data flow

Topics

1. ROS 2 architecture (nodes, topics, DDS)

2. Isaac Sim ↔ ROS 2 bridge

3. TF trees & coordinate frames

4. Sensor fusion basics (vision + LiDAR)

Hands-on

1. ROS 2 integration with simulated robot

2. Fuse camera + LiDAR data for localization

Outcome: ROS‑native robotics systems
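The sensor fusion basics listed above can be illustrated with the simplest possible case: combining two independent estimates of the same quantity by inverse-variance weighting. The sketch assumes Gaussian, uncorrelated errors and uses made-up numbers for a camera-based and a LiDAR-based position estimate; a real localization stack would typically run a filter over full robot state rather than a single scalar.

```python
def fuse(est_a, var_a, est_b, var_b):
    """Fuse two independent estimates by inverse-variance weighting.

    Returns (fused_estimate, fused_variance). The fused variance is
    never larger than the smaller of the two input variances.
    """
    if var_a <= 0.0 or var_b <= 0.0:
        raise ValueError("variances must be positive")
    w_a = 1.0 / var_a
    w_b = 1.0 / var_b
    fused = (w_a * est_a + w_b * est_b) / (w_a + w_b)
    fused_var = 1.0 / (w_a + w_b)
    return fused, fused_var


if __name__ == "__main__":
    # Hypothetical x-position estimates in meters.
    camera_x, camera_var = 10.0, 4.0  # noisier visual estimate
    lidar_x, lidar_var = 12.0, 1.0    # tighter LiDAR estimate
    print(fuse(camera_x, camera_var, lidar_x, lidar_var))
```

The result sits closer to the lower-variance LiDAR estimate, which is the behavior a weighted fusion step is meant to provide.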

Focus: Autonomy for moving robots

Topics

1. Localization & mapping (SLAM concepts)

2. Navigation stacks

3. Obstacle avoidance

4. Simulation‑based stress testing

Hands-on

1. AMR navigation in generated warehouse

2. Multi‑scenario testing via AuraSim

Outcome: Robust navigation pipelines

Focus: Physical interaction

Topics

1. Grasping fundamentals

2. Motion planning

3. Vision‑guided manipulation

4. Reachability & collision analysis

Hands-on

1. Pick‑and‑place task in factory cell

2. Vision‑driven manipulation

Outcome: Industrial‑grade manipulation skills

Focus: Intelligence & real‑world readiness

Topics

1. GR00T & embodied foundation models

2. Vision‑Language‑Action (VLA) concepts

3. Training a mini VLA model

4. Sim‑to‑real gaps & validation

Hands-on

1. Train mini VLA using synthetic data

2. Validate behavior across scenarios

Outcome: Intelligent, adaptable robot behaviors

Options

1. Industrial arm with vision-guided manipulation

2. AMR with autonomous navigation

3. Physical AI robot responding to language commands

Requirements

1. AuraSim-generated world

2. Isaac Sim validation

3. ROS 2 integration

4. Sensor fusion (minimum two sensors)

5. Final demo + technical report

Outcome: Portfolio-grade, production-oriented robotics project

Participants finish with a portfolio-grade, production-ready robotics system.

Run thousands of parallel simulations in the cloud, scale your training pipeline, and access everything via web or API.

AI engineers entering robotics, robotics & automation engineers

ROS developers, Omniverse / Isaac users

Research labs and universities advancing robotics AI

Industrial teams needing fast, realistic simulation for training & testing

Early Access to AuraSim: Get hands-on with unreleased capabilities and influence how new features evolve.

Dedicated Support & Onboarding: Work closely with our engineering team to integrate AuraSim and the NVIDIA stack into your existing stack.

Joint R&D Opportunities: Co-develop custom scenarios, sensors, or interfaces tailored to your domain.

Showcase & Visibility: Be featured as a design partner in case studies, demos, and global conferences.

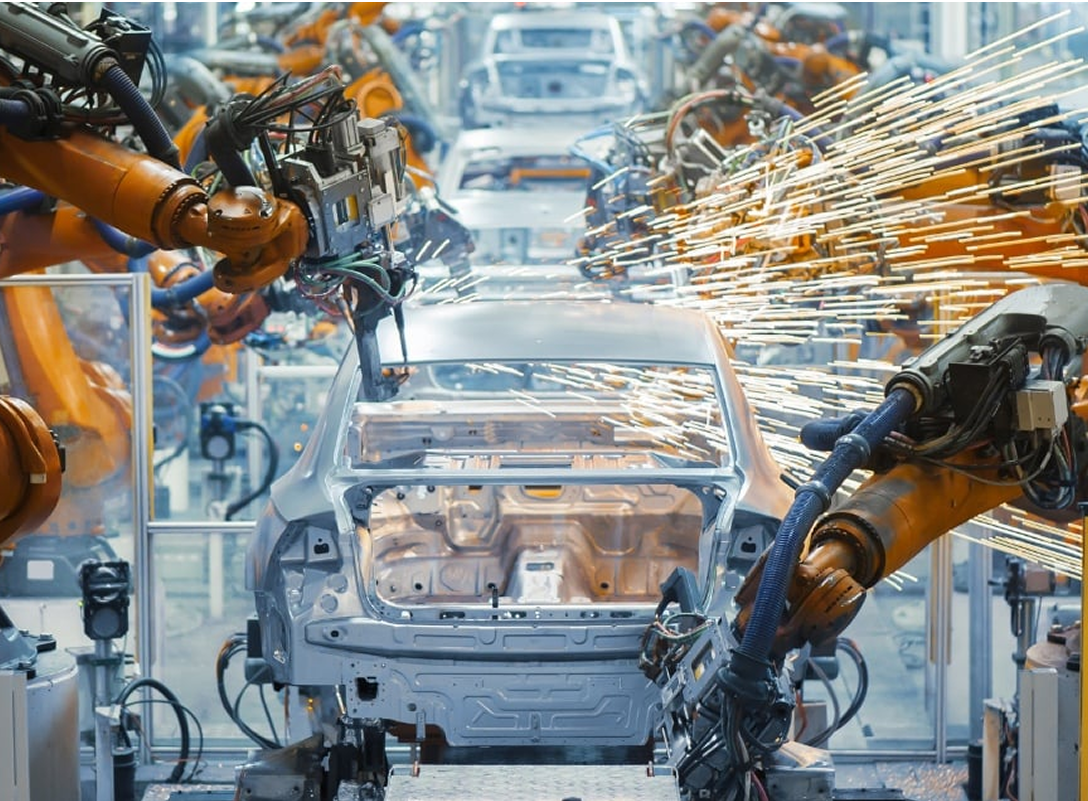

Robotic Arms (welding, assembly)

SCARA & Delta Robots (pick-and-place)

CNC-Integrated Bots

Mobile Manipulators

Validate assembly workflows

Train precise, multi-step operations

Run HRI and fault-handling scenarios

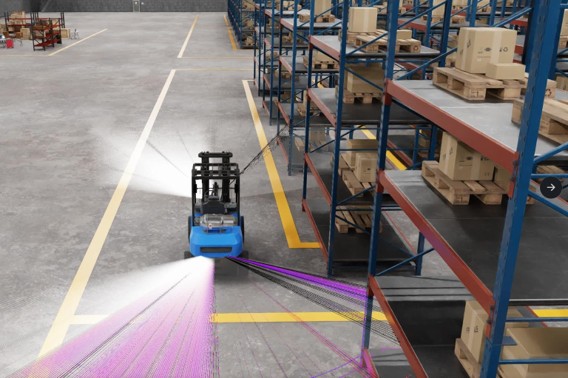

AMRs & AGVs

Sorting Robots

Pick-and-Place Vision Bots

Palletizers

Optimize fleet coordination

Test last-meter navigation

Run HRI and fault-handling scenarios

UGVs and UAVs

Recon Drones

Surveillance Crawlers

Tethered Inspection Units

Test in tactical or unstructured terrain

Validate mission logic

Train multi-agent recon and AI perception

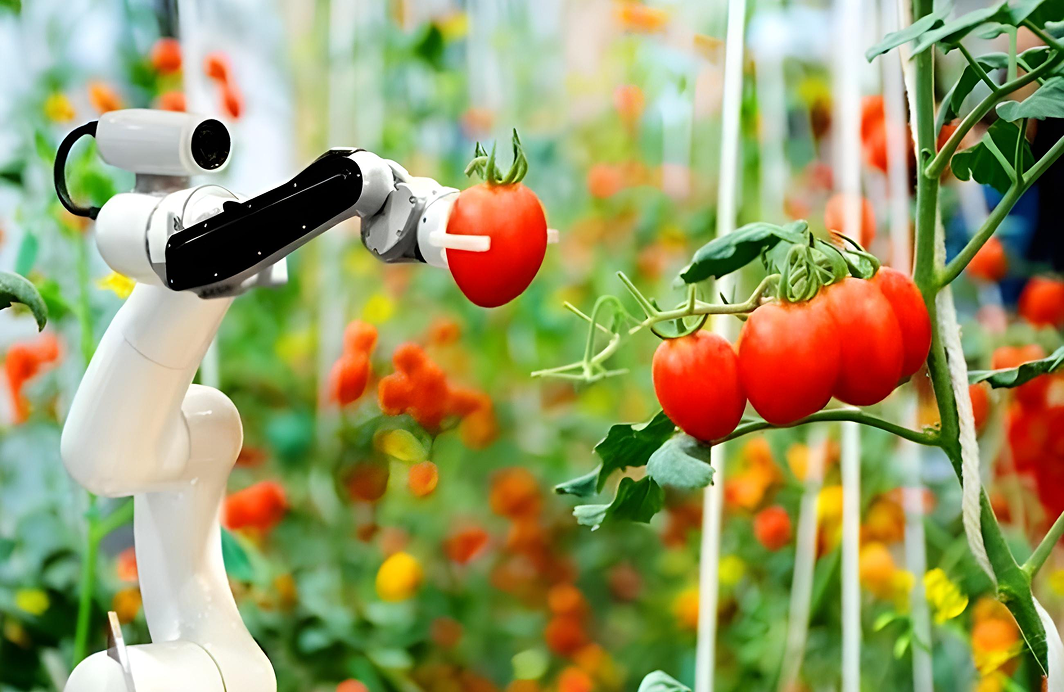

Harvesting Robots

Autonomous Tractors

Drones for crop monitoring

Weeding/irrigation platforms

Simulate terrain and crop variability

Train plant health monitoring AI

Test precision-farming scenarios

Pipeline Crawlers

Substation Maintenance Bots

Wind Turbine Inspectors

Underwater ROVs

Wall-Climbing Robots

Hazard modeling (heat, wind, pressure)

Validate remote maintenance operations

Train vision and control in extreme environments

It is a live, hands-on developer program focused on building production-grade robotics systems using Physical AI, ROS 2, AuraSim, and NVIDIA Isaac. The program emphasizes simulation-first development, system validation, and deployment-ready workflows.

This is a live, cohort-based program. Sessions are instructor-led with real-time walkthroughs, hands-on labs, and weekly practical assignments.

By the end of the program, you will have built and validated:

All work is validated using AuraSim and NVIDIA Isaac Sim.

Basic familiarity with robotics concepts (frames, sensors, joints) and Python is recommended. Prior experience with ROS, Isaac, or Omniverse is helpful but not mandatory.

No. All participants receive access to an end-to-end managed NVIDIA GPU cloud environment. You only need a stable internet connection and a modern laptop.

The program uses AuraSim, NVIDIA Omniverse, NVIDIA Isaac Sim and Isaac ROS, ROS 2, PyTorch, and open-source Physical AI and VLA models.

AuraSim is AuraML’s generative, sensor-accurate simulation platform.

It is used to generate realistic environments; validate perception, navigation, and manipulation; and perform sim-to-real testing before deployment. Participants receive free AuraSim access during the program.

Duration: 8-12 weeks

Sessions: 2-3 live sessions per week

Time commitment: ~5-7 hours per week (including labs and assignments)

Participants who successfully complete the program receive:

AuraML + NVIDIA Co-Certified Physical AI & Robotics Developer Certificate

Verifiable credential ID

LinkedIn-ready certification badge

Yes. The program is delivered in partnership with NVIDIA, using NVIDIA Omniverse, Isaac Sim, Isaac ROS, and NVIDIA GPU-powered cloud infrastructure.

Yes. The program is designed around industry-aligned tooling and workflows used by real robotics teams, making it relevant for roles in:

The fee includes live instruction, cloud access, AuraSim access during the program, and joint certification.

Each cohort is limited to 150 engineers to ensure high-quality interaction and hands-on support.
